Determining Minimum Survey Sample Sizes Based on Survey Margin of Error

When conducting a research study based on a survey, if data quality is important to you, then two of the (many) factors you should consider are “survey margin of error” and “sample size.” For those new to survey-based research studies, these are two vocabulary terms you need to know:

- Sample size: The number of people who fully complete your survey.

- Survey margin of error: The amount of random sampling error in the results of a survey. The larger the survey margin of error, the less confidence one should have in the results of the survey.

NOTE: There are many survey margin of error calculators available on the Internet. Here’s one from SurveyMonkey that CyberEdge commonly uses:

https://www.surveymonkey.com/mp/margin-of-error-calculator/

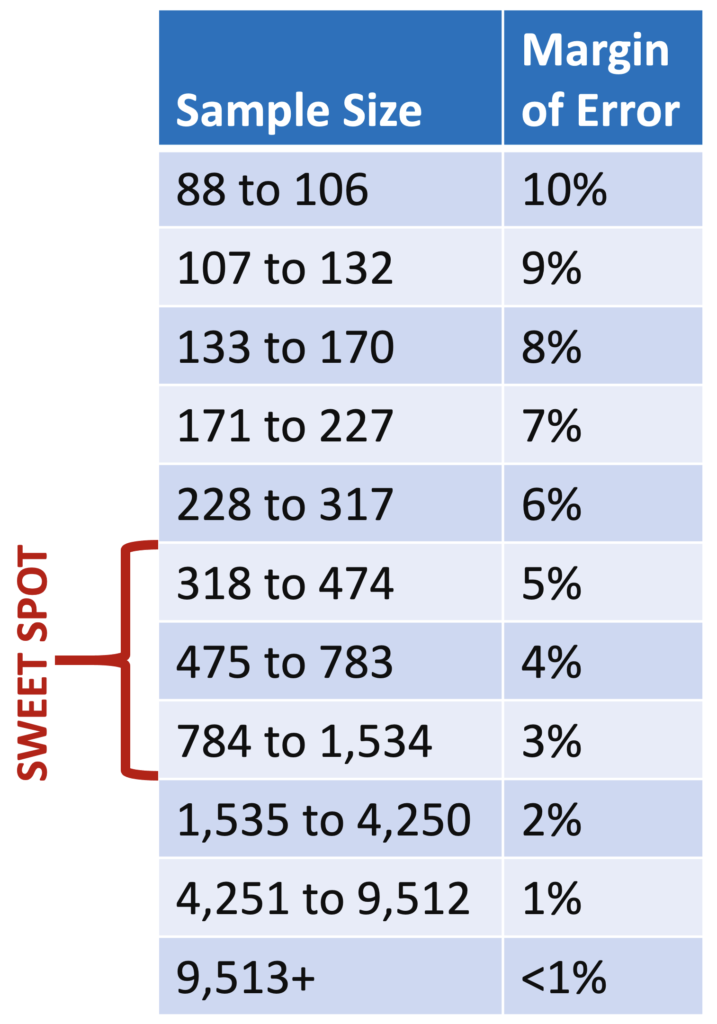

Let’s say you were attempting to determine the percentage of organizations that were compromised by a successful cyberattack last year. And let’s say that the figure is 81%. (That’s an actual statistic from CyberEdge’s 2020 Cyberthreat Defense Report.) If your sample size were 100 people, then your survey margin of error (at a 95% confidence level, which is industry standard) would be about 10% – meaning that the “actual” figure is likely between 71% and 91% (i.e., plus or minus 10 percentage points). If your sample size were 1,200 people, like in our report, then the survey margin of error would be about 3% – meaning that the “actual” figure is likely between 78% and 84%. That’s a huge difference!
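For readers who want to check these figures by hand, here is a minimal Python sketch. It assumes the standard formula for the margin of error of a proportion and a worst-case proportion of 0.5; the rounded 10% and 3% figures above are consistent with that assumption, but the function name and defaults are illustrative, not taken from any particular calculator.

```python
import math

Z_95 = 1.96  # z-score for a 95% confidence level


def margin_of_error(sample_size, proportion=0.5, z=Z_95):
    """Margin of error for a proportion, ignoring population size.

    proportion=0.5 is the worst case (it gives the widest margin).
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


for n in (100, 1200):
    moe = margin_of_error(n)
    print(f"n={n:,}: +/-{moe * 100:.1f} percentage points")
```

With these assumptions, 100 respondents works out to roughly 9.8 points and 1,200 respondents to roughly 2.8 points, which round to the 10% and 3% quoted above.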

When you conduct survey-based research, you not only want to generate leads from the report, but you also want your data to be reliable and actionable. In CyberEdge’s view, this means never publishing a survey report with a survey margin of error higher than 5%. The “sweet spot” for us is between 3% and 5%. (The next time you see a political or other poll presented by a major news outlet, check out the survey margin of error in the footnote beneath the graphic. The odds are near certain that it will fall between 3% and 5%.)
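If you would rather start from a target margin of error and work backward to a minimum sample size, the same standard formula can be inverted. The sketch below assumes a 95% confidence level, a worst-case proportion of 0.5, and a population large enough to ignore; `min_sample_size` is a hypothetical helper name used only for illustration.

```python
import math


def min_sample_size(target_margin, proportion=0.5, z=1.96):
    """Smallest sample size whose margin of error is at or below target_margin.

    target_margin is a fraction, e.g. 0.05 for 5%.
    """
    return math.ceil(z ** 2 * proportion * (1 - proportion) / target_margin ** 2)


for target in (0.05, 0.04, 0.03):
    print(f"+/-{target:.0%}: at least {min_sample_size(target):,} respondents")
```

Under these assumptions, a 5% margin needs at least 385 respondents and an exact 3.0% margin needs 1,068. Calculators that round to the nearest whole percent will report 3% for somewhat smaller samples.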

Unfortunately, there’s a myth held by novice researchers that it’s impossible to know the true survey margin of error because you don’t know the size of the population you’re surveying. Well, although it’s true you never know the exact population, the ‘good news’ is that it just doesn’t matter. Let’s say, for example, you’re conducting a survey of IT security professionals in the United States who work for organizations with 1,000 or more employees. Although you don’t know that exact population, the survey margin of error (based on a given sample size) is effectively the same whether that population is 100,000 people, 1 million people, or 10 million people!
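One way to see why is to look at the standard margin of error formula with the finite population correction. The symbols here are introduced for illustration and assume a simple random sample:

$$
\text{MOE} = z \sqrt{\frac{p(1-p)}{n}} \cdot \sqrt{\frac{N-n}{N-1}}
$$

Here $n$ is the sample size, $N$ is the population size, $p$ is the proportion being measured (0.5 in the worst case), and $z \approx 1.96$ at a 95% confidence level. Population size only appears in the second factor, and that factor is very close to 1 whenever $N$ is much larger than $n$. With $n = 1{,}200$ and $N = 100{,}000$, for example, it is about 0.994, so the margin of error moves by only a few hundredths of a percentage point.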

The following table compares sample sizes with corresponding survey margin of error. The results are the same whether the population is 1 million people or 10 million people. Don’t believe it? Click the link above and play around with SurveyMonkey’s margin of error calculator yourself!
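If you prefer to reproduce this kind of comparison in code rather than in a web calculator, here is a rough Python sketch. It assumes a 95% confidence level, a worst-case proportion of 0.5, and the standard finite population correction. The sample sizes are illustrative picks (several appear elsewhere in this post), and the output is rounded to whole percentages.

```python
import math


def margin_of_error(sample_size, population, proportion=0.5, z=1.96):
    """Margin of error for a proportion, with finite population correction."""
    standard_error = math.sqrt(proportion * (1 - proportion) / sample_size)
    correction = math.sqrt((population - sample_size) / (population - 1))
    return z * standard_error * correction


SAMPLE_SIZES = (100, 200, 400, 800, 1200, 1600)  # illustrative choices
POPULATIONS = (1_000_000, 10_000_000)


def build_rows():
    """Return (sample size, margin at 1M, margin at 10M) in whole percent."""
    rows = []
    for n in SAMPLE_SIZES:
        margins = [round(margin_of_error(n, N) * 100) for N in POPULATIONS]
        rows.append((n, *margins))
    return rows


print(f"{'Sample size':>11} | {'Pop. 1M':>8} | {'Pop. 10M':>8}")
for n, moe_1m, moe_10m in build_rows():
    print(f"{n:>11,} | {moe_1m:>7}% | {moe_10m:>7}%")
```

Under these assumptions, the two population columns come out identical for every sample size once rounded.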

Quick sidebar: Unless you’re covering numerous countries in your survey-based research study, there’s really no need to exceed 800 respondents. Is a 2% margin of error with 1,600 respondents, for example, that much different from a 3% margin of error with 800 respondents when making decisions based on actionable data? We think not.

Over the years, CyberEdge has seen IT vendors and (shockingly) IT research firms either withhold sample size data from their survey reports (a despicable practice) or reference a sample size so low (under 300 people) it makes your skin feel itchy. Sure, generating leads from your internal or third-party survey report is important. But so is looking at yourself in the mirror, knowing that you’re promoting high-quality data that will ultimately reflect on you and your employer.

To learn about other ways to maximize the quality of your survey report data, check out our blog post titled “Five Easy Ways to Maximize Survey Data Quality.”